Introduction to Bad Bots

Bad bots are automated software programs designed to perform malicious or harmful tasks on the internet. These tasks pose significant threats to organisations and individuals by compromising cybersecurity and eroding trust.

Bad bots typically exploit vulnerabilities in online systems and applications for personal gain, financial profit, or any other malicious intent.

Bad bots can be used to launch attacks on almost every industry. That said, industries with high volumes of financial transactions, such as e-commerce, are targeted the most.

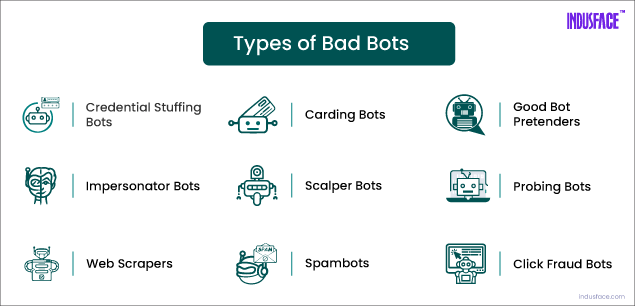

Types of Bad Bots

The landscape of bad bots is constantly evolving with new variations and attacks. That said, here are the most commonly used bad bots.

1. Credential Stuffing/Account Takeover Bots

These bots automate the process of testing large numbers of stolen usernames and passwords on various websites to gain unauthorized access to user accounts. They exploit the common practice of password reuse across multiple online services. Once a valid combination is found, the bot can access the account for malicious purposes such as identity theft, fraud, or further credential harvesting.

Credential stuffing bots are especially dangerous because a successful login gives the attacker direct access to the system. The risk is greater where privilege-escalation vulnerabilities also exist, since a compromised user account could then be used to reach admin accounts.
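
One common signal of credential stuffing is a single source failing logins for many different usernames in a short period. The following is a minimal sketch of that idea, not a production detector. The function name, the event tuple layout, and the thresholds (`window_seconds`, `max_usernames`) are assumptions chosen for illustration.

```python
from collections import defaultdict


def flag_credential_stuffing(events, window_seconds=300, max_usernames=10):
    """Return the set of IPs with failed logins for many distinct usernames.

    events: iterable of (timestamp_seconds, ip, username, success) tuples.
    The thresholds are illustrative assumptions, not recommended values.
    """
    failures = defaultdict(list)  # ip -> [(timestamp, username), ...]
    for timestamp, ip, username, success in events:
        if not success:
            failures[ip].append((timestamp, username))

    flagged = set()
    for ip, attempts in failures.items():
        attempts.sort()
        start = 0
        for end in range(len(attempts)):
            # Slide the left edge until the window spans at most window_seconds.
            while attempts[end][0] - attempts[start][0] > window_seconds:
                start += 1
            distinct_users = {user for _, user in attempts[start:end + 1]}
            if len(distinct_users) > max_usernames:
                flagged.add(ip)
                break
    return flagged
```

A real deployment would likely key on more than the IP address (for example device or session identifiers), since attackers often rotate IPs.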

Check out these tips to prevent credential stuffing attacks.

2. Carding Bots

Carding bots are used to test stolen credit card information for validity and make fraudulent transactions online. These bots automate the process of entering card details, expiration dates, and CVV codes on e-commerce websites to purchase goods or services using stolen payment credentials.

Carding bots often use techniques such as spoofing, session hijacking, IP rotation, CAPTCHA solving and more to evade detection by fraud detection systems.

Gain more insights into carding attacks and preventive measures.

3. Good Bot Pretenders

Bad bots can modify their user-agent strings to mimic those of popular search engine crawlers or other legitimate bots. By presenting themselves as well-known bots like Googlebot or Bingbot, they can bypass security measures that whitelist known good bots while blocking unknown or suspicious user agents.

Bad bots may also spoof their IP addresses to make it appear as if their requests are coming from legitimate sources, such as reputable hosting providers or data centers. This can help them avoid IP-based blocking or rate-limiting mechanisms used by websites to deter malicious bot traffic.
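
A user-agent string alone cannot prove that a request comes from a real search engine crawler. One widely used check is forward-confirmed reverse DNS: resolve the client IP to a hostname, confirm the hostname belongs to the crawler's domain, then resolve that hostname back and confirm it returns the same IP. The sketch below illustrates this for a claimed Googlebot. The function name and the injectable `reverse_lookup` / `forward_lookup` parameters are assumptions for illustration, and the domain suffixes should be checked against Google's current published guidance.

```python
import socket

# Assumed suffixes for Google's crawler hostnames; verify against current docs.
GOOGLEBOT_SUFFIXES = (".googlebot.com", ".google.com")


def is_verified_googlebot(ip, reverse_lookup=None, forward_lookup=None):
    """Forward-confirmed reverse DNS check for a client claiming to be Googlebot.

    The lookup functions can be replaced (e.g. in tests); by default they use
    the standard library resolver, which here handles IPv4 addresses only.
    """
    reverse_lookup = reverse_lookup or (lambda addr: socket.gethostbyaddr(addr)[0])
    forward_lookup = forward_lookup or (lambda host: socket.gethostbyname_ex(host)[2])
    try:
        hostname = reverse_lookup(ip).lower().rstrip(".")
        if not hostname.endswith(GOOGLEBOT_SUFFIXES):
            return False
        # The hostname must resolve back to the original IP address.
        return ip in forward_lookup(hostname)
    except OSError:
        # Covers failed DNS lookups (socket.herror, socket.gaierror).
        return False
```

Because DNS lookups are slow, a site would normally cache the result per IP rather than run this check on every request.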

4. Impersonator Bots

Impersonator bots mimic human behavior to impersonate legitimate users on websites, social media platforms, or messaging apps. They may create fake accounts, interact with other users, or engage in automated conversations.

Impersonator bots can be used for various purposes, including spreading misinformation, manipulating online discussions, or influencing public opinion.

5. Scalper Bots

Scalper bots are used to automate the purchase of limited-release or high-demand products from e-commerce websites, ticketing platforms, or online auctions. They work by repeatedly sending requests to purchase products as soon as they become available, often bypassing rate limits and security measures.

Scalper bots can quickly deplete inventory and resell products at inflated prices on secondary markets.

6. Probing Bots

Probing bots are programmed to scan websites, servers, or network devices for vulnerabilities. They can exploit known security flaws to gain unauthorized access, inject malicious code, or steal sensitive data.

These bots are typically used to find vulnerabilities first and then use other bots to exploit the vulnerabilities.

7. Web Scrapers

These bots are designed to automatically extract data from websites, often without permission. They work by sending HTTP requests to target websites, parsing the HTML content, and extracting specific information such as product details, prices, or contact information.

Web scrapers can be used for legitimate purposes, but when used without authorization, they can drain website resources and, more importantly, steal intellectual property that is then used to gain an unfair competitive advantage. They can also be used in conjunction with other bots, such as scalper bots, to cause inventory stockouts.

8. Spambots

Spambots are bots that generate and distribute spam emails, social media messages, or comments. They often operate by harvesting email addresses or social media profiles from public sources or compromised databases.

Spambots can flood inboxes and social media feeds with unsolicited messages promoting scams, phishing links, or fake products/services.

9. Click Fraud Bots

Click fraud bots simulate human clicks on online advertisements, pay-per-click ads, or affiliate links to generate revenue for bot operators while defrauding advertisers.

They can inflate website traffic and click counts artificially, leading to inaccurate analytics data and wasted advertising budgets. Click fraud bots may use proxies or distributed networks to evade detection.

The Impact of Bad Bots

Overall, the impacts of bad bot attacks can be far-reaching and multifaceted, affecting not only the targeted organization but also its customers, partners, and the broader digital ecosystem. Here are some of the most important impacts:

1. Financial Losses

Bad bot attacks can result in significant financial losses for businesses due to stolen revenue, fraudulent transactions, increased operational costs for mitigating the attack, and potential fines or legal fees resulting from regulatory violations.

One notable example of a company affected by bad bots is Ticketmaster, a leading ticket sales and distribution company. In a lawsuit filed in 2017, Ticketmaster alleged that a ticket broker, Prestige Entertainment, used scalper bots to bypass its security measures and purchase large quantities of tickets for popular events. Tickets bought this way are typically resold on secondary markets at inflated prices, harming both Ticketmaster and its customers.

2. Damage to Reputation

Bad bot attacks can damage the reputation and trustworthiness of businesses and organizations. Customers may lose confidence in the security of the platform, leading to decreased user engagement, customer churn, and long-term damage to brand loyalty.

One example of a company that suffered reputational damage due to bad bots is Twitter. Twitter faced widespread criticism for its failure to effectively combat the spread of bots and fake accounts during the 2016 U.S. presidential election campaign. It was reported that numerous automated bot accounts were used to amplify political propaganda, manipulate public opinion, and disseminate misleading information, leading to concerns about the integrity of the electoral process and the role of social media platforms in shaping public discourse.

3. Data Breaches

Bad bot attacks can lead to data breaches and the exposure of sensitive information, including customer data, payment details, intellectual property, and trade secrets. This can result in regulatory penalties, legal liabilities, and damage to the affected organization’s reputation.

Data breaches often stem from unpatched vulnerabilities, such as SQL injection flaws in a website or API. Because these vulnerabilities are hard to find manually, attackers typically use probing bots to discover them before carrying out a breach.

4. Disruption of Operations

Bad bot attacks can disrupt the normal functioning of websites, applications, and online services, leading to downtime, degraded performance, and loss of productivity for users and employees. This can have cascading effects on business operations and revenue generation.

Bot-driven DDoS attacks are the most common method of disrupting operations. The WireX botnet, for example, used a network of compromised Android devices to launch a series of DDoS attacks against content delivery networks (CDNs), online gaming services, and other internet infrastructure providers including AWS.

5. Negative SEO Impact

Bad bots engaging in web scraping or content scraping can negatively impact a website’s search engine optimization (SEO) efforts by duplicating content, diluting keyword relevance, and causing indexing issues. This can result in decreased search engine rankings, reduced organic traffic, and diminished online visibility.

6. Wasted Resources

Bad bot attacks consume valuable server resources, bandwidth, and computing power, leading to increased hosting costs, slower website performance, and reduced scalability. This can strain IT infrastructure and impede the delivery of services to legitimate users.

7. Legal and Regulatory Consequences

Bad bot attacks may violate laws, regulations, or industry standards related to data privacy, cybersecurity, and consumer protection. Organizations found to be non-compliant may face regulatory investigations, fines, and legal action from affected parties or regulatory authorities.

8. Loss of Competitive Advantage

Bad bots deployed against a business by its competitors can undermine its competitive advantage by stealing intellectual property, proprietary data, or business intelligence. This can lead to loss of market share, decreased profitability, and weakened competitiveness in the marketplace.

9. Social and Ethical Implications

Bad bot attacks can have broader social and ethical implications, including the spread of misinformation, manipulation of public opinion, and erosion of trust in digital platforms. This can undermine the integrity of democratic processes, public discourse, and societal norms.

Detection and Prevention Techniques

With the rise of automated threats, detecting and mitigating bad bot activities is essential for organizations to safeguard their applications, data, and user interactions. Attackers use bots for credential stuffing, data scraping, spam, and DDoS attacks, making it crucial to deploy effective countermeasures. Below are key techniques for detecting and preventing bad bots.

Detection Techniques for Bad Bots

- CAPTCHA Challenges – Completely Automated Public Turing tests to tell Computers and Humans Apart (CAPTCHAs) are widely used to differentiate between real users and bots. By requiring users to solve puzzles, image recognition tasks, or one-click verifications, CAPTCHAs disrupt automated scripts that cannot pass these challenges.

- Honeypots – These are hidden form fields or deceptive pages designed to detect and trap malicious bots. Since legitimate users cannot see or interact with honeypots, any engagement with these elements signals bot activity, allowing organizations to block such requests.

- Behavioral Analysis – Advanced bot detection systems analyze user behavior, such as mouse movements, keystroke patterns, navigation speed, and interaction frequency. Bots often exhibit unnatural behaviors—such as excessively fast clicks or lack of cursor movement—that can be flagged as suspicious.

- Traffic Pattern Monitoring – Identifying anomalies in traffic patterns, such as a sudden spike in requests from a single IP or repeated logins in quick succession, helps detect bot-driven attacks. Machine learning models can refine detection by analyzing historical data and recognizing evolving bot behaviors.
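
The honeypot technique from the list above can be sketched in a few lines. The example assumes a form that includes an extra field hidden from human visitors with CSS; the field name `website_url` and the function name are hypothetical, chosen only for illustration.

```python
# Hypothetical name of a form field that is hidden from human visitors via CSS.
HONEYPOT_FIELD = "website_url"


def is_honeypot_triggered(form_data, honeypot_field=HONEYPOT_FIELD):
    """Return True when the hidden honeypot field was filled in.

    Human visitors never see the field, so any non-blank value suggests an
    automated script that fills every input it finds.
    """
    value = form_data.get(honeypot_field, "")
    return bool(value.strip())
```

For instance, `is_honeypot_triggered({"email": "a@example.com", "website_url": "http://spam.example"})` returns `True`, while a submission that leaves the hidden field empty returns `False`. Honeypots only catch unsophisticated bots, so this check is best treated as one signal among several.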

Prevention Techniques for Bad Bots

- User Agent and IP Reputation Analysis – Security tools can block known bad user agents and IP addresses associated with botnets or malicious activities. A continuously updated reputation database ensures that threats are proactively mitigated.

- Multi-Factor Authentication (MFA) – Requiring users to verify their identity through an additional authentication step, such as SMS codes or biometric verification, significantly reduces the risk of automated login attacks.

- JavaScript and Device Fingerprinting – Advanced bot mitigation tools use JavaScript-based detection and device fingerprinting to assess browser integrity, screen resolution, installed plugins, and other device attributes. Bots that fail these validation checks are blocked.

- Web Application Firewall (WAF) with Bot Mitigation – With bot mitigation capabilities, WAFs use behavioral analysis, rate limiting, and machine learning to differentiate between real users and automated threats. AI/ML-driven WAFs continuously learn from traffic patterns, improving their ability to detect and mitigate evolving bot threats with higher accuracy.
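
As a rough illustration of user agent and IP reputation analysis, the sketch below checks a request against a static blocklist. The network ranges (taken from reserved documentation address space) and the user-agent markers are placeholder assumptions; a real system would load these from a continuously updated reputation feed, and the function name is hypothetical.

```python
import ipaddress

# Placeholder blocklist using reserved documentation ranges (assumption).
BLOCKED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]

# Illustrative substrings seen in some automation tools' default user agents.
BLOCKED_AGENT_MARKERS = ("python-requests", "curl", "scrapy")


def should_block(ip, user_agent):
    """Return True if the IP or user agent matches the blocklists."""
    address = ipaddress.ip_address(ip)
    if any(address in network for network in BLOCKED_NETWORKS):
        return True
    agent = (user_agent or "").lower()
    if not agent:
        # A missing user agent is treated as suspicious in this sketch.
        return True
    return any(marker in agent for marker in BLOCKED_AGENT_MARKERS)
```

User-agent strings are trivially forged, so a match here is better used to raise a risk score or trigger a challenge than as the only basis for blocking.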

Key Ways WAFs Prevent Bot Attacks

- Traffic Filtering & Anomaly Detection – Monitors incoming requests to detect unusual behavior, such as rapid login attempts or high-frequency API calls.

- Bot Signature & Behavioral Analysis – Identifies bots based on known attack patterns, header inconsistencies, and non-human interaction behaviors.

- Rate Limiting – Restricts excessive requests from a single source to prevent credential stuffing, scraping, and brute-force attempts.

- DDoS Protection – Identifies and blocks botnets attempting to overwhelm the system with fake traffic.

- Custom Security Rules – Enables businesses to fine-tune bot defense based on risk level, traffic intent, and industry-specific threats.

- Advanced Bot Control – Uses JavaScript challenges, browser fingerprinting, and CAPTCHA enforcement to detect stealthy bots trying to bypass detection.

- Threat Intelligence – Leverages real-time data from global security networks to proactively block emerging bot threats.
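
Rate limiting, one of the mechanisms listed above, can be illustrated with a sliding-window counter. This is a simplified, in-memory sketch and not how any particular WAF implements it; the class name, parameters, and the choice of a per-client key are assumptions.

```python
from collections import defaultdict, deque


class SlidingWindowRateLimiter:
    """Allow at most max_requests per client within window_seconds."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # client_id -> timestamps of allowed requests

    def allow(self, client_id, now):
        """Record a request at time `now` (in seconds) and return whether it is allowed."""
        hits = self._hits[client_id]
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True
```

In practice `now` would come from a monotonic clock such as `time.monotonic()`, and the counters would usually live in a shared store so that limits hold across multiple servers.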

To understand more about how WAFs function as a critical security layer, check out our detailed blog on How Does a WAF Work?

Regulatory and Legal Considerations

The legal landscape surrounding bad bots is complex and constantly evolving, with laws and regulations varying by jurisdiction and industry. If your company suspects bot activity, the following laws and regulations may be critical for taking the next steps:

Computer Fraud and Abuse Act (CFAA)

In the United States, the Computer Fraud and Abuse Act (CFAA) is a federal law that prohibits unauthorized access to computer systems and networks. Bad bots that gain access to protected computer systems without authorization may be subject to prosecution under the CFAA. The CFAA has been used in cases involving unauthorized access, hacking, and data breaches perpetrated by bad actors, including bot operators.

Data Protection and Privacy Regulations

Bad bots that collect personal or sensitive information from websites without consent may violate data protection and privacy regulations, such as the GDPR (General Data Protection Regulation) in the European Union or the CCPA (California Consumer Privacy Act) in the United States. These regulations impose strict requirements on the collection, processing, and handling of personal data and may subject bad bot operators to fines, penalties, and legal liabilities for non-compliance.

Copyright and Intellectual Property Laws

Bad bots that scrape copyrighted content or proprietary information from websites without authorization may infringe on the intellectual property rights of the website owners. Copyright laws protect original works of authorship, including text, images, and multimedia content, from unauthorized reproduction or distribution.

Anti-Competitive Practices and Unfair Competition Laws

Bad bots that engage in anti-competitive practices, such as price scraping, market manipulation, or deceptive advertising, may violate anti-trust laws and unfair competition statutes. Companies harmed by these practices may pursue legal action under anti-trust laws, consumer protection laws, or unfair competition statutes to seek damages, injunctive relief, or other remedies.

Botnet Regulation and Cybersecurity Laws

Botnets, networks of compromised computers or devices controlled by malicious actors, are subject to regulation under cybersecurity laws and regulations aimed at preventing cyber-attacks, data breaches, and other malicious activities. Governments may enact legislation and regulatory frameworks to combat botnets, enhance cybersecurity, and protect critical infrastructure from cyber threats posed by bad bots.

Check out the 10 Botnet Detection and Removal Best Practices

Case Studies of Bad Bot Attacks Blocked by AppTrana WAAP

Botnet-Driven Low Rate DDoS attack on a Fortune 500 Company

A Fortune 500 commodities trader was hit by a botnet-driven DDoS attack from more than 8 million IPs, with attack traffic reaching 14,000x the usual traffic.

Learn how AppTrana, an AI-powered, fully managed WAAP, was used to ensure 100% availability. Read the complete case study here.

Carding Bot Attack on a US Jewellery Retailer

A large US jeweller faced frequent card-cracking attacks, with attackers able to place up to fifteen high-ticket orders in a single attack.

Learn how 16,000 such attacks were fully mitigated within a few hours. Download the case study here.

Future Trends and Emerging Threats

The future of bad bot development and evolution is likely to be characterized by increased sophistication, diversification of attack vectors, and an ongoing cat-and-mouse game between attackers and defenders.

The three most prominent emerging threats are:

1. Botnet-as-a-Service

DDoS-as-a-service platforms are already plentiful on the dark web, and they are evolving into botnet-as-a-service (BaaS) platforms. Compute power is already cheap, and with the emergence of AI and LLMs, hackers can now easily rent, say, an account takeover bot or a scalping bot.

This will make it easier for novice hackers to orchestrate sophisticated attacks without specialized technical knowledge.

2. IoT Botnets

The Mirai botnet, discovered back in 2016, was among the most notorious IoT botnets. Today, even the most rudimentary electrical devices, such as light bulbs, are IoT-enabled. This presents a ripe opportunity for hackers because of widespread adoption, weak security controls, and always-on connectivity. Once infected, these devices may be used to launch large-scale DDoS attacks, distribute malware, or carry out other malicious activities.

3. Increased Sophistication

Bad bots are likely to become more sophisticated and adaptive, leveraging artificial intelligence (AI), machine learning (ML), and natural language processing (NLP) techniques to mimic human behavior, evade detection, and bypass security measures. These advanced bots may be capable of learning and evolving in real-time to counteract defensive measures deployed by website owners and security professionals.

Conclusion

According to our State of Application Security study, bot attacks have increased by 147% in the last year, and nine out of ten sites are hit by bot attacks every single day.

Given the versatile nature of these attacks, their continued sophistication, and the emergence of BaaS platforms, it is important for security teams to invest in AI-powered, fully managed bot mitigation platforms such as AppTrana.