Humans, bots, and applications may overuse or abuse a web property, intentionally or unintentionally, exhausting its network resources and causing it to slow down, crash, or go offline. Rate limiting is an effective strategy to prevent the overuse or abuse of digital assets and to blunt certain kinds of web attacks.

What exactly is rate limiting, how does it work, and what can it protect against? Read on to find out.

What is Rate Limiting?

Rate limiting is a technique used to control the frequency of requests sent to an API, server, or application within a specific period. It ensures that users, bots, or clients cannot exceed predefined thresholds, protecting backend resources from overload and preventing abuse.

At its core, rate limiting answers the question:

“How many requests can this client make in a certain time window without harming system performance or other users?”

Purposes

Rate limiting is typically used to balance the loads on servers and network infrastructure while optimizing the performance of system resources. It prevents attackers from overwhelming digital resources and ensures that all legitimate users get equal access to the service.

Rate limiting is also useful for managing the flow of information between complex, interconnected systems, so that multiple data streams can be merged without overwhelming the devices that receive them. Beyond performance, it also helps control costs by capping resource use.

For instance, a user may mistakenly send a request that retrieves a huge volume of information. This can congest the network for all users and consume significant computing resources. With rate limits in place, such errors are contained and the associated computation costs are avoided.

What Does Rate Limiting Protect Against?

Without rate limiting, web applications and APIs are exposed to uncontrolled traffic surges that can degrade performance, cause downtime, or open the door to exploitation. While it is not a standalone security measure, rate limiting plays a crucial role in mitigating several common web threats:

Brute-Force and Credential-Stuffing Attacks: Attackers often make thousands of login attempts in seconds to guess valid credentials. Rate limiting restricts how frequently login or authentication requests can be made from a single IP or account, significantly reducing the success rate of such attacks.

Bot and Scraper Activity: Automated bots can overload servers or extract large volumes of data. By capping request rates, rate limiting helps slow or block scraping attempts, API enumeration, or content harvesting that could expose sensitive business information.

API Misuse and Overconsumption: APIs are prone to overuse and abuse, whether from automated bots, misconfigured clients, or malicious actors. Unchecked requests can overwhelm servers, slow down responses, or exhaust critical resources, a risk known as API4:2023 – Unrestricted Resource Consumption in the OWASP API Security Top 10. Rate limiting addresses this by controlling request frequency, enforcing quotas, and ensuring fair access for all clients. When combined with behavioral monitoring or adaptive throttling, it helps distinguish legitimate traffic from abusive activity, maintaining both performance and security.

Application-Layer DDoS Attacks: Unlike volumetric DDoS attacks that target network bandwidth, application-layer DDoS attacks flood endpoints with legitimate-looking HTTP requests. Rate limiting curbs these request bursts by applying per-source or per-endpoint limits, reducing the strain on web servers and maintaining availability.

By regulating request flow, rate limiting acts as a traffic governor, shielding infrastructure and applications from both intentional abuse and accidental overload.

How Does Rate Limiting Work?

Rate limiting is typically implemented at the application level rather than inside the web server itself. The application monitors the rate of incoming requests, most commonly using IP addresses, API keys, or user identifiers, to determine who or what is making those requests.

Typically, the security or rate limiting solution tracks the IP addresses from which requests originate and evaluates the time gap between consecutive requests. Based on this information, thresholds are set and adjusted to define how many requests are permitted within a specific timeframe, for example, “100 requests per minute” or “3 login attempts per account.”

When a requester exceeds this predefined limit, the solution enforces control. It can block further requests from that IP address, introduce a delay before processing new ones, or return an HTTP 429 (“Too Many Requests”) response. In some cases, access may be temporarily suspended until the time window resets.
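The track-and-enforce flow described above can be sketched in a few lines. This is a minimal, framework-free illustration rather than a production implementation: the names `handle_request`, `LIMIT`, and `WINDOW_SECONDS` are hypothetical, the client identifier is assumed to be an IP address, and the caller passes in the current time so the behavior is easy to follow.

```python
import math
from dataclasses import dataclass

LIMIT = 100          # assumed threshold: requests allowed per window
WINDOW_SECONDS = 60  # assumed window length ("100 requests per minute")


@dataclass
class ClientState:
    window_start: float  # when this client's current window began
    count: int = 0       # requests accepted in the current window


_clients: dict[str, ClientState] = {}


def handle_request(client_id: str, now: float) -> tuple[int, dict[str, str]]:
    """Return (HTTP status, response headers) for a request arriving at `now`."""
    state = _clients.get(client_id)
    # Start a fresh window for new clients or once the old window has expired.
    if state is None or now - state.window_start >= WINDOW_SECONDS:
        state = ClientState(window_start=now)
        _clients[client_id] = state

    if state.count >= LIMIT:
        # Over the limit: reject and tell the client when the window resets.
        seconds_left = math.ceil(state.window_start + WINDOW_SECONDS - now)
        return 429, {"Retry-After": str(max(seconds_left, 1))}

    state.count += 1
    return 200, {}
```

Returning a `Retry-After` header with the 429 response lets well-behaved clients back off until the window resets instead of retrying blindly.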

For example, consider a user trying to log in to an online banking portal. If the user repeatedly enters the wrong password, rate limiting ensures that after a set number of failed attempts (say, three), the system automatically denies further logins and temporarily freezes the account. This prevents brute-force attacks while also signaling to legitimate users that the account needs manual verification before being unlocked.
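The login scenario can be sketched as a small per-account guard. This is an illustrative outline only: `LoginGuard`, its methods, and the three-attempt default are assumptions made for the example, and a real system would persist this state and tie `unlock` to an actual verification step.

```python
from collections import defaultdict

MAX_FAILED_ATTEMPTS = 3  # assumed threshold, matching the example in the text


class LoginGuard:
    """Freeze an account after too many consecutive failed logins."""

    def __init__(self, max_failures: int = MAX_FAILED_ATTEMPTS):
        self.max_failures = max_failures
        self._failures = defaultdict(int)  # account -> consecutive failures
        self._locked = set()               # accounts awaiting verification

    def attempt(self, account: str, password_ok: bool) -> str:
        """Return 'ok', 'denied', or 'locked' for one login attempt."""
        if account in self._locked:
            return "locked"  # frozen accounts reject even correct passwords
        if password_ok:
            self._failures.pop(account, None)  # success resets the counter
            return "ok"
        self._failures[account] += 1
        if self._failures[account] >= self.max_failures:
            self._locked.add(account)
            return "locked"
        return "denied"

    def unlock(self, account: str) -> None:
        """Call after the user has been verified through another channel."""
        self._locked.discard(account)
        self._failures.pop(account, None)
```

Counting failures per account rather than per IP address means the limit still holds when an attacker spreads attempts across many source addresses.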

By continuously tracking, enforcing, and resetting request thresholds, rate limiting keeps applications responsive for legitimate users and shields backend systems from overload or abuse.

Static vs Adaptive Rate Limiting

Rate limiting strategies differ in how dynamically they respond to changing traffic conditions. The two primary approaches, static and adaptive rate limiting, serve distinct purposes depending on the environment and threat landscape.

1. Static Rate Limiting

Static rate limiting relies on fixed thresholds that define how many requests a client can make within a specific time window. These limits are manually configured based on factors like expected traffic volume, system capacity, or business rules.

For example, an application might permit 100 requests per minute per IP or 5 failed login attempts per account. Once this limit is reached, the system blocks or delays further requests until the time window resets.

This approach is simple, predictable, and effective in stable environments with consistent traffic. It helps prevent brute-force attempts, scraping, and accidental server overloads.

However, static thresholds can become too rigid in dynamic conditions. Legitimate surges, such as those during product launches, flash sales, or seasonal campaigns, may be mistaken for attack spikes, triggering false positives. On the other hand, lenient settings can leave systems vulnerable during sudden threat bursts. As a result, static rate limiting demands ongoing tuning and monitoring to stay effective.

2. Adaptive Rate Limiting

Adaptive rate limiting evolves beyond static constraints by adjusting limits in real time based on contextual signals and behavioral analysis. Instead of enforcing a one-size-fits-all threshold, it leverages historical data, user behavior, and traffic baselines to make intelligent, automated adjustments.

This approach continuously evaluates indicators such as request velocity, session anomalies, and geographic origin to distinguish between normal fluctuations and suspicious activity. For instance, it may automatically increase limits during expected high-traffic hours or tighten restrictions when detecting abnormal request bursts or repetitive access patterns from multiple sources.
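One simple way to picture a threshold that follows a traffic baseline is an exponentially weighted moving average with some headroom on top. The sketch below is only one possible heuristic, not a description of how any particular product works; `AdaptiveThreshold` and all of its parameters are hypothetical.

```python
class AdaptiveThreshold:
    """Derive a request limit from a moving baseline of normal traffic."""

    def __init__(self, initial_baseline: float, headroom: float = 2.0,
                 alpha: float = 0.25, floor: int = 10, ceiling: int = 10_000):
        self.baseline = float(initial_baseline)  # typical requests per window
        self.headroom = headroom  # how far above baseline is still acceptable
        self.alpha = alpha        # weight given to the newest observation
        self.floor = floor        # never drop the limit below this value
        self.ceiling = ceiling    # never raise the limit above this value

    @property
    def limit(self) -> int:
        raw = self.baseline * self.headroom
        return int(min(max(raw, self.floor), self.ceiling))

    def observe(self, requests_in_window: int) -> bool:
        """Record a finished window; return True if it exceeded the limit."""
        anomalous = requests_in_window > self.limit
        if not anomalous:
            # Learn only from normal windows so that an attack burst
            # cannot drag the baseline (and the limit) upward.
            self.baseline = ((1 - self.alpha) * self.baseline
                             + self.alpha * requests_in_window)
        return anomalous
```

With an initial baseline of 100 requests per window, the limit starts at 200, rises gradually as normal traffic grows, and stays put when a window is flagged as anomalous.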

Adaptive rate limiting is particularly effective against bot-driven DDoS, API abuse, and credential-stuffing attacks, where adversaries mimic legitimate users. By dynamically optimizing thresholds, organizations can maintain performance, minimize false positives, and strengthen protection without sacrificing user experience.

In essence, while static rate limiting offers simplicity and stability, adaptive rate limiting delivers agility and intelligence, ensuring continuous balance between accessibility and security.

Rate Limiting Strategies

Rate limiting relies on different strategies to monitor and control how requests flow through a system. The right approach helps maintain consistent performance, prevent overload, and ensure fair access for all users.

At the core, there are four primary algorithms that define how requests are measured, stored, and regulated.

Token Bucket

The token bucket algorithm is designed to allow short, controlled bursts of traffic without exceeding an overall average rate. A virtual bucket fills with tokens at a steady pace, and each request consumes one token.

If the bucket still contains tokens, requests are processed immediately. When it empties, excess requests are delayed or dropped until more tokens are added. This approach is ideal for APIs or applications that experience occasional spikes but need to maintain a consistent long-term flow.
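A minimal token bucket might look like the following. The class and parameter names are illustrative, the caller supplies the current time in seconds, and this version drops excess requests rather than delaying them.

```python
class TokenBucket:
    """Allow bursts up to `capacity` while averaging `refill_rate` per second."""

    def __init__(self, capacity: float, refill_rate: float, now: float = 0.0):
        self.capacity = capacity        # maximum burst size, in tokens
        self.refill_rate = refill_rate  # tokens added per second
        self.tokens = capacity          # start with a full bucket
        self.updated_at = now

    def allow(self, now: float) -> bool:
        """Consume one token if available; return whether the request passes."""
        elapsed = max(0.0, now - self.updated_at)
        # Add the tokens earned since the last call, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        self.updated_at = max(self.updated_at, now)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

Here `capacity` controls how large a burst is tolerated, while `refill_rate` sets the long-term average, so the two can be tuned independently.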

Leaky Bucket

The leaky bucket algorithm is best for converting unpredictable bursts into a steady, manageable stream of requests. Each incoming request enters a queue (the “bucket”) that processes requests at a fixed rate. If the bucket fills up, new requests overflow and are discarded.

This ensures downstream services, such as databases or backend APIs, are not overwhelmed, maintaining predictable performance even under heavy traffic.
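The queue-based behavior can be sketched as follows. This is a simplified, single-threaded illustration with hypothetical names: the caller is assumed to invoke `drain` on a regular schedule (and before offering new requests) and to forward whatever it returns to the downstream service.

```python
from collections import deque


class LeakyBucket:
    """Queue requests and release them at a fixed rate; overflow is rejected."""

    def __init__(self, capacity: int, leak_rate: float, now: float = 0.0):
        self.capacity = capacity    # maximum number of queued requests
        self.leak_rate = leak_rate  # requests released per second
        self.queue = deque()
        self.last_leak = now        # time up to which releases are accounted

    def offer(self, request, now: float) -> bool:
        """Try to enqueue a request; return False if the bucket is full."""
        if len(self.queue) >= self.capacity:
            return False
        if not self.queue:
            # An idle bucket starts timing from the moment work arrives.
            self.last_leak = max(self.last_leak, now)
        self.queue.append(request)
        return True

    def drain(self, now: float) -> list:
        """Return the requests that are due to be processed by time `now`."""
        due = max(0, int((now - self.last_leak) * self.leak_rate))
        released = [self.queue.popleft() for _ in range(min(due, len(self.queue)))]
        if self.queue:
            # Keep fractional progress toward the next release.
            self.last_leak += due / self.leak_rate
        else:
            self.last_leak = max(self.last_leak, now)
        return released
```

However bursty the arrivals are, the downstream service sees at most `leak_rate` requests per second, at the cost of added latency for queued requests.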

Fixed Window Counter

This is the simplest and most memory-efficient rate-limiting strategy. It counts how many requests occur within a fixed time window (e.g., 100 requests per minute). Once the limit is reached, new requests are blocked until the next window begins.

While easy to implement, this method can permit short bursts of excess traffic at the boundaries of time windows, since counters reset at fixed intervals.
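The boundary effect is easiest to see in code. The sketch below uses hypothetical names and windows aligned to multiples of `window_seconds`; it stores just one window index and one counter per client.

```python
class FixedWindowCounter:
    """Count requests per client in fixed, clock-aligned windows."""

    def __init__(self, limit: int, window_seconds: float):
        self.limit = limit
        self.window_seconds = window_seconds
        self._counts = {}  # client -> (window index, requests in that window)

    def allow(self, client: str, now: float) -> bool:
        window = int(now // self.window_seconds)
        seen_window, count = self._counts.get(client, (window, 0))
        if seen_window != window:
            count = 0  # a new window has begun, so the counter resets
        if count >= self.limit:
            self._counts[client] = (window, count)
            return False
        self._counts[client] = (window, count + 1)
        return True


limiter = FixedWindowCounter(limit=100, window_seconds=60)
# 100 requests in the last second of one window...
late = sum(limiter.allow("client-a", 59.0) for _ in range(100))
# ...followed by 100 more in the first second of the next window.
early = sum(limiter.allow("client-a", 60.0) for _ in range(100))
```

Both `late` and `early` come out to 100, so 200 requests are accepted within roughly one second even though the configured limit is 100 per minute.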

Sliding Window (Log and Counter Variations)

The sliding window approach offers the most accurate and fair enforcement of rate limits by continuously evaluating requests within a moving timeframe.

- In a sliding log, every request timestamp is recorded, and old entries are discarded as they fall outside the window.

- In a sliding counter, request counts are tracked using overlapping intervals to approximate the same effect with less memory.

This strategy smooths out boundary issues and maintains precision, making it suitable for high-traffic APIs where fairness and accuracy are crucial.
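Both variations can be sketched side by side. These are simplified single-client illustrations with hypothetical names; the counter version uses one common approximation, weighting the previous window's count by how much of it still overlaps the sliding window.

```python
from collections import deque


class SlidingWindowLog:
    """Exact variant: keep a timestamp for every accepted request."""

    def __init__(self, limit: int, window_seconds: float):
        self.limit = limit
        self.window_seconds = window_seconds
        self.log = deque()

    def allow(self, now: float) -> bool:
        cutoff = now - self.window_seconds
        while self.log and self.log[0] <= cutoff:
            self.log.popleft()  # discard entries that fell out of the window
        if len(self.log) >= self.limit:
            return False
        self.log.append(now)
        return True


class SlidingWindowCounter:
    """Approximate variant: two counters instead of a full log."""

    def __init__(self, limit: int, window_seconds: float):
        self.limit = limit
        self.window_seconds = window_seconds
        self.current_window = 0
        self.current_count = 0
        self.previous_count = 0

    def allow(self, now: float) -> bool:
        window = int(now // self.window_seconds)
        if window != self.current_window:
            # Carry the count over only if we moved to the adjacent window.
            adjacent = window == self.current_window + 1
            self.previous_count = self.current_count if adjacent else 0
            self.current_count = 0
            self.current_window = window
        elapsed = (now % self.window_seconds) / self.window_seconds
        # Weight the previous window by the share that still overlaps.
        estimated = self.previous_count * (1 - elapsed) + self.current_count
        if estimated >= self.limit:
            return False
        self.current_count += 1
        return True
```

With a limit of 100 per 60 seconds, a burst of 100 requests at second 59 leaves no room at second 60 in either variant, which closes the boundary gap of the fixed window counter. The log is exact but stores one entry per request, while the counter stores only two numbers per client.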

AppTrana’s Intelligent Rate Limiting

AppTrana uses rate limiting to control traffic spikes, prevent brute-force and bot attacks, and ensure consistent application performance during both normal and peak load conditions.

AppTrana WAAP takes rate limiting beyond static enforcement by combining AI-driven traffic analysis with context-aware adaptive controls. Rather than applying the same limit across all users or endpoints, AppTrana continuously learns from real traffic behavior to dynamically adjust thresholds in real time.

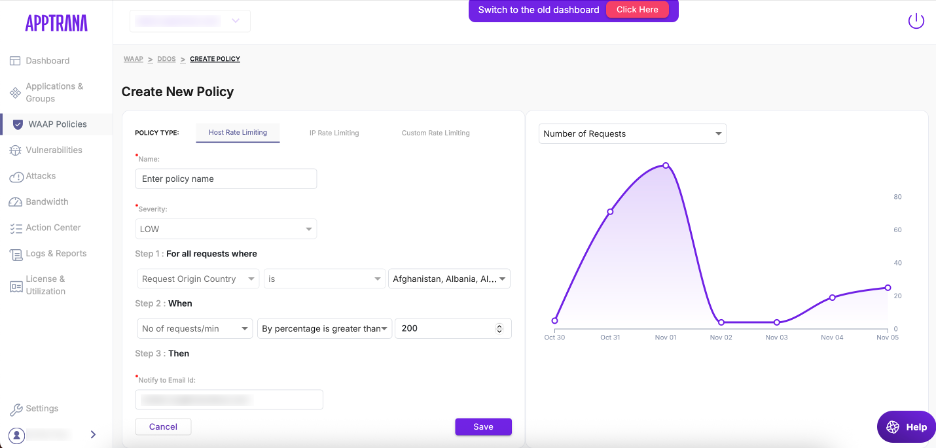

Through its unified interface, organizations can define, monitor, and refine rate-limiting behavior at the host, IP, and custom levels, ensuring that traffic is managed contextually and that DDoS-style overload attempts are automatically contained before they affect availability.

Host Rate Limiting

At the host level, AppTrana focuses on managing the overall traffic volume across entire domains or subdomains. It continuously analyzes aggregate traffic patterns and automatically applies controls when a host begins to receive more requests than normal operating thresholds allow.

This ensures that traffic from a specific region, network, or marketing campaign does not monopolize application resources. Whether it is a planned surge in legitimate users or a sudden flood of automated hits, host-level rate limiting ensures consistent performance across every endpoint and user segment.

It acts as a proactive safeguard, balancing accessibility and availability without manual intervention or rigid limits.

IP Rate Limiting

At a more granular level, AppTrana applies rate limiting per IP address to detect and contain abusive patterns. Instead of relying only on raw request counts, AppTrana evaluates multiple behavioral parameters such as cookie validity, session consistency, and request diversity to assess the legitimacy of traffic.

This intelligence helps distinguish real users from automated scripts or malicious bots. For instance, a valid browser session shows consistent cookie behavior, while bots and headless clients often lack these characteristics.

By recognizing such anomalies, AppTrana dynamically throttles or blocks suspicious activity without affecting genuine users.

This approach strengthens protection against brute-force attempts, scraping bots, and high-frequency API misuse, while maintaining low latency for trusted clients.

Custom Rate Limiting

For applications with unique workflows or business logic, AppTrana offers custom rate-limiting rules that extend beyond standard host and IP-based controls.

For example, authentication APIs or payment gateways can be given stricter limits, while general browsing or data retrieval endpoints can remain more flexible. This balance between rigidity and adaptability allows teams to apply precise protection where it matters most, minimizing disruption while maximizing resilience.

Each custom rule is supported by AppTrana’s real-time analytics engine, which continuously visualizes request trends and threshold triggers. This data-driven feedback loop allows teams to fine-tune limits confidently, backed by live evidence of how traffic is behaving.

Unified and Adaptive Defense

All rate-limiting configurations within AppTrana, whether host, IP, or custom, feed into a single analytics layer that offers end-to-end visibility into traffic health.

This unified insight helps identify patterns, validate system response, and ensure policies are performing as intended across all assets. Combined with automated blocking actions and contextual intelligence, AppTrana transforms rate limiting from a static control into a dynamic, adaptive defense mechanism.

By continuously learning from live traffic, it keeps legitimate users flowing smoothly while automatically isolating any source that threatens application performance or reliability.

In essence, AppTrana’s intelligent rate limiting provides a balanced defense mechanism, maintaining availability and user experience without compromising security.

Start a free trial to get instant protection against DDoS, bots, credential stuffing, and API abuse with adaptive rate limiting and real-time mitigation.